AgentOps Monitor

Enhances LLM agent reliability by tracking interactions, tool usage, and errors in real time.

Trusted by

Built on established observability frameworks, AgentOps Monitor has been implemented in a range of enterprise environments where reliability and scalability are required.

Success Story

AppFolio enhanced its LLM application performance by integrating Datadog LLM Observability, leading to improved model quality and faster feature expansion.

Problem

Managing the performance and reliability of LLM agents is challenging because their behavior is complex and dynamic. Without proper observability, unexpected behavior, tool misuse, and errors can go undetected, leading to degraded user experiences and increased operational costs.

Solution

AgentOps Monitor addresses these challenges by providing comprehensive observability into LLM agents. It tracks interactions and tool usage in real time and logs errors as they occur, giving teams insight into agent behavior and performance. This proactive approach lets teams identify and resolve issues quickly, before they affect users.
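To make the idea concrete, here is a minimal sketch of what tracking tool usage and errors can look like in Python. It is not the AgentOps Monitor API: `AgentTracker`, `ToolEvent`, and `lookup_order` are hypothetical names invented for illustration, and events are kept in memory rather than sent to a monitoring backend.

```python
import functools
import time
from dataclasses import dataclass, field
from typing import Any, Callable, List, Optional


@dataclass
class ToolEvent:
    """One recorded tool call (hypothetical schema, for illustration only)."""
    tool: str
    duration_s: float
    ok: bool
    error: Optional[str] = None


@dataclass
class AgentTracker:
    """Keeps events in memory; a real deployment would forward them to a backend."""
    events: List[ToolEvent] = field(default_factory=list)

    def track(self, func: Callable[..., Any]) -> Callable[..., Any]:
        """Wrap a tool function so every call is recorded, including failures."""
        @functools.wraps(func)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            start = time.perf_counter()
            try:
                result = func(*args, **kwargs)
            except Exception as exc:
                elapsed = time.perf_counter() - start
                self.events.append(ToolEvent(func.__name__, elapsed, False, repr(exc)))
                raise  # the caller still sees the original error
            elapsed = time.perf_counter() - start
            self.events.append(ToolEvent(func.__name__, elapsed, True))
            return result
        return wrapper

    def error_rate(self) -> float:
        """Fraction of recorded calls that raised an exception."""
        if not self.events:
            return 0.0
        return sum(1 for e in self.events if not e.ok) / len(self.events)


tracker = AgentTracker()


@tracker.track
def lookup_order(order_id: str) -> dict:
    """Hypothetical agent tool that fails on malformed input."""
    if not order_id.isdigit():
        raise ValueError(f"invalid order id: {order_id!r}")
    return {"order_id": order_id, "status": "shipped"}


# One successful call and one failing call, both recorded by the tracker.
lookup_order("1042")
try:
    lookup_order("abc")
except ValueError:
    pass
```

After these two calls, `tracker.events` holds one successful and one failed `ToolEvent`, and `tracker.error_rate()` reflects that half of the calls failed. The decorator re-raises the exception, so instrumenting a tool this way does not change how the agent handles errors.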

Result

Users can expect improved agent reliability, faster issue resolution times, and enhanced user satisfaction. By proactively monitoring agent activities, teams can maintain high-quality interactions and reduce operational disruptions.

Use Cases

AgentOps Monitor is an observability solution designed to provide end-to-end visibility into Large Language Model (LLM) agents. It tracks every interaction, monitors tool usage, and captures errors in real time, so that AI agents operate efficiently and reliably. By integrating with existing workflows, AgentOps Monitor helps teams proactively identify and resolve issues, optimize performance, and maintain high-quality user experiences.

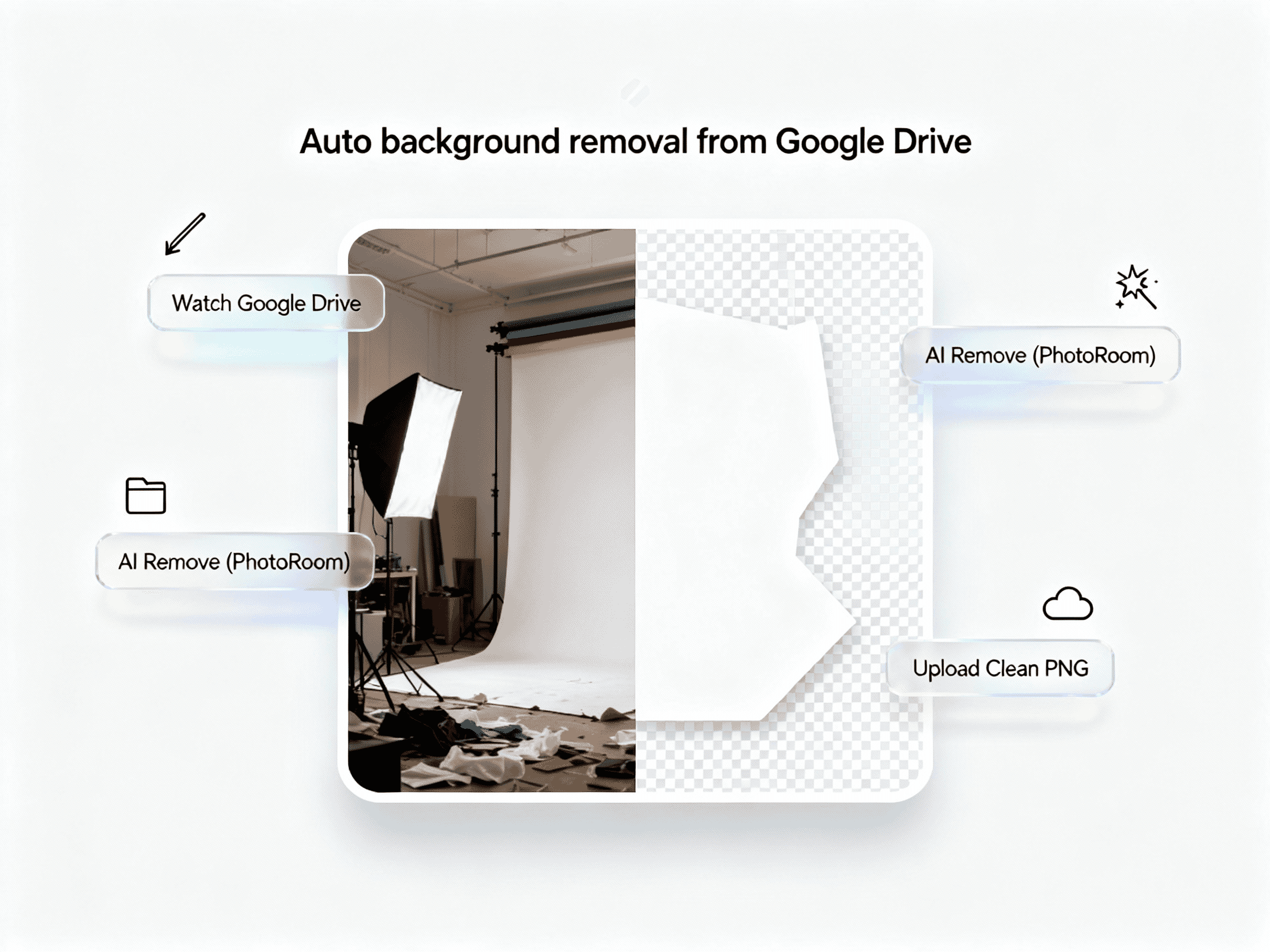

Integrations

Connect to your existing tools seamlessly

Technology Stack

Automation

Infrastructure

Implementation Timeline

Requirement Analysis

3–5 days: Gather detailed requirements from stakeholders, define objectives, and outline monitoring and observability needs for LLM agents.

System Design

4–6 days: Architect the solution, design data flows, monitoring dashboards, and integration points with LLMs and third-party platforms.

Development & Integration

1–2 weeks: Implement the monitoring system, connect to APIs, build dashboards, set up automated logging, and integrate with workflow tools (n8n, Make.com).

Testing & Quality Assurance

3–5 days: Perform functional, integration, and stress testing to ensure error logging, metrics collection, and alerts work as expected.
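As an illustration of the kind of check this phase might include, the sketch below tests a simple error-rate alert rule. Everything here is an assumption made for the example: `ErrorRateAlert`, its sliding-window logic, and the threshold values are hypothetical and do not describe how AgentOps Monitor evaluates alerts.

```python
from collections import deque


class ErrorRateAlert:
    """Hypothetical alert rule: fire when the failure rate over the most
    recent `window` calls exceeds `threshold`."""

    def __init__(self, window: int = 20, threshold: float = 0.25, min_calls: int = 5):
        self.outcomes = deque(maxlen=window)  # oldest outcomes drop off automatically
        self.threshold = threshold
        self.min_calls = min_calls  # avoid alerting on too little data

    def record(self, ok: bool) -> bool:
        """Record one call outcome; return True if the alert should fire."""
        self.outcomes.append(ok)
        if len(self.outcomes) < self.min_calls:
            return False
        failures = sum(1 for outcome in self.outcomes if not outcome)
        return failures / len(self.outcomes) > self.threshold


def test_alert_fires_only_above_threshold() -> None:
    alert = ErrorRateAlert(window=10, threshold=0.25, min_calls=4)
    # Healthy traffic: eight successful calls must never trigger the alert.
    assert not any(alert.record(True) for _ in range(8))
    # 1 failure in 9 calls and 2 failures in 10 calls stay under 25%.
    assert alert.record(False) is False
    assert alert.record(False) is False
    # The window is full, so the oldest success is evicted: 3 failures in 10 calls.
    assert alert.record(False) is True


test_alert_fires_only_above_threshold()
```

A test like this pins down both sides of the rule: the alert stays quiet under normal traffic and fires once failures cross the configured threshold.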

Deployment

2–3 days: Deploy the agent monitoring system to production environments, configure cloud hosting, and verify end-to-end operation.

Post-Deployment Support

Ongoing: Provide support, monitoring, and updates. Train teams on dashboards and best practices for LLM observability.

Support Included

Comprehensive documentation with step-by-step workflow setup, API configuration guides, and integration instructions. An optional onboarding call and email support are available during the launch phase.